How to Write a Software Test Plan: A Practical Guide

Before you can even think about writing a single test case, you need a software test plan. A test plan is more than a scope definition or a strategy; it's the core document that gets your entire team on the same page about quality. It clearly answers what to test, how to test it, and maybe most importantly, what "done" actually looks like.

Why a Test Plan Is More Than Just Paperwork

I’ve seen too many projects treat the test plan as a box-ticking exercise. That’s a huge mistake. A great test plan isn't bureaucratic red tape; it's the strategic compass that guides your project to a successful launch. It becomes the single source of truth that shuts down scope creep, manages stakeholder expectations, and saves a ton of time and money by catching issues before they blow up.

Think of it as the foundational agreement that ensures developers, project managers, and clients are all working toward the same definition of quality.

The Blueprint for Quality Assurance

Would you build a house without a blueprint? Of course not. The result would be chaos. A software project without a test plan runs the exact same risk. This document is what turns abstract business requirements into concrete, testable actions, creating a vital communication bridge for everyone involved.

For instance, a project manager might look at the plan and immediately spot that the testing timeline is way too tight, giving them a chance to adjust early. A developer can see which specific features are about to get hammered in testing, which helps them focus their own efforts.

Driving Alignment Across Teams

Miscommunication is a classic project killer. It’s so easy for different teams to operate on completely different assumptions about what needs to be delivered. The test plan forces those critical conversations to happen right at the start.

A well-structured test plan is not just about finding bugs; it’s about building a shared understanding of quality. It aligns technical and non-technical stakeholders on objectives, risks, and responsibilities, turning the quality assurance process from a siloed activity into a collaborative mission.

This alignment is absolutely essential when you're working with distributed or nearshore teams. A clear, documented plan makes sure a QA engineer in another time zone has the exact same context as the product owner sitting in the main office. That alone cuts down on a huge amount of ambiguity and rework.

A Proactive Approach to Risk Management

Crafting a solid software test plan has become a cornerstone of successful development for a reason. A thoughtful plan can reduce project risks by up to 30% and noticeably improve software reliability by ensuring thorough test coverage. With over 73% of organizations now blending manual and automated testing, the plan is the central hub where you define how that mix will work. You can find more insights about effective test planning from recent industry research.

Ultimately, by getting ahead of potential problems—like an unavailable test environment or a dependency on a flaky third-party API—you can build mitigation strategies before they derail the project. This foresight is what shifts QA from a reactive, bug-hunting exercise into a proactive, quality-building discipline.

Setting Clear Objectives and Defining Scope

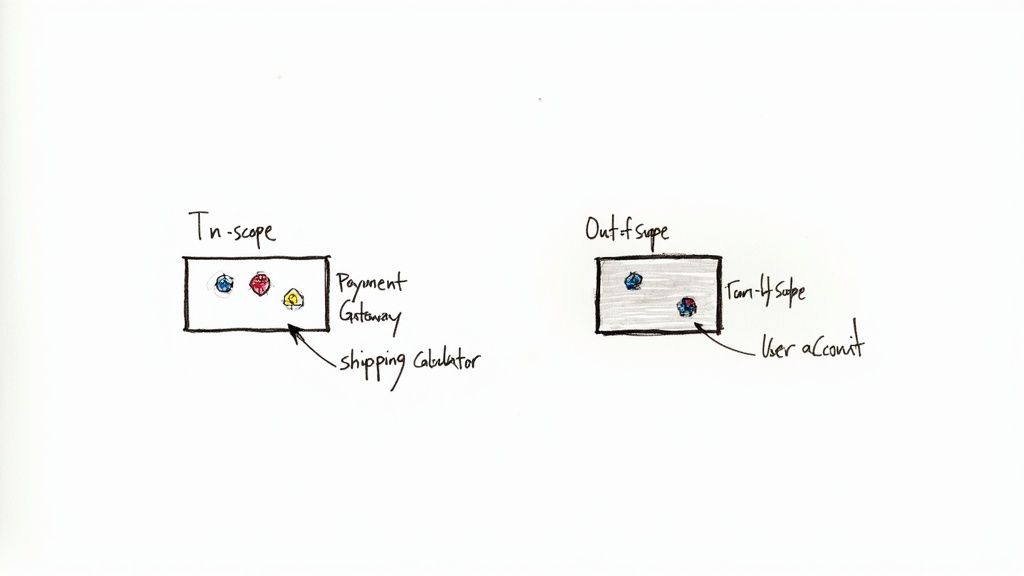

Every great test plan starts by drawing a firm line in the sand. This is where you decide exactly what you're going to test and, just as importantly, what you're not going to test. Without this clarity, you're opening the door to scope creep, wasted hours, and a frustrated team.

Think of it as transforming a fuzzy business goal into a crystal-clear mission for your QA engineers. This step ensures everyone’s efforts are aimed at what truly matters for the release, preventing them from chasing down bugs in features that aren't even part of the current update. If you need a refresher on the basics, getting a handle on how to define project scope is a great starting point: https://getnerdify.com/blog/how-to-define-project-scope.

From Business Goals to Testable Objectives

The first job is to translate high-level project requirements into specific, measurable goals for your testing. A vague goal like "test the new checkout feature" is a recipe for confusion. We need to be much more precise.

A good starting point is to make sure the requirements themselves are solid. This guide to testing software requirements is a valuable resource for making sure you're building your plan on a strong foundation.

Let's stick with our e-commerce checkout example. Instead of that vague goal, we can break it down into concrete objectives:

- Objective 1: Verify that a customer can complete a purchase using every supported payment method (e.g., Visa, PayPal, Apple Pay).

- Objective 2: Confirm shipping costs are calculated correctly for our top 5 shipping destinations based on item weight.

- Objective 3: Ensure the order confirmation email is sent within 60 seconds of a successful transaction and contains accurate order details.

See the difference? These objectives are specific and actionable. They give the QA team a clear finish line to run towards.
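To show how a measurable objective turns into a pass/fail check, here's a minimal Python sketch of Objective 3. The function `email_objective_met` and its parameters are hypothetical; in a real project the timestamps would come from your payment service and mail logs, and "accurate order details" would cover more than the order ID and total checked here.

```python
from datetime import datetime, timedelta

# Threshold taken from Objective 3: confirmation email within 60 seconds.
MAX_EMAIL_DELAY = timedelta(seconds=60)


def email_objective_met(paid_at: datetime, email_sent_at: datetime,
                        email_body: str, order_id: str, total: str) -> bool:
    """Hypothetical check for Objective 3.

    The email must be sent within MAX_EMAIL_DELAY of the successful payment
    and must mention the order ID and the order total.
    """
    delay = email_sent_at - paid_at
    on_time = timedelta(0) <= delay <= MAX_EMAIL_DELAY
    accurate = order_id in email_body and total in email_body
    return on_time and accurate


paid = datetime(2024, 5, 1, 12, 0, 0)
body = "Order A-1001 confirmed. Total: $59.90"
print(email_objective_met(paid, paid + timedelta(seconds=42), body, "A-1001", "$59.90"))  # True
print(email_objective_met(paid, paid + timedelta(seconds=75), body, "A-1001", "$59.90"))  # False
```

The point isn't the code itself; it's that a well-written objective already contains everything a tester needs to decide pass or fail.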

Don't Forget to Define What's "Out-of-Scope"

I've seen more projects get derailed by unspoken assumptions than almost anything else. Clearly stating what you won't be testing is one of the most powerful things you can do to protect your timeline. It builds a fence around your team's work, keeping distractions out.

For our e-commerce checkout, the out-of-scope list is crucial for focus:

- User Account Creation Flow: Handled by a separate team and covered in their test plan.

- Product Recommendation Engine: While it appears in the cart, its logic is not part of this release.

- Third-Party Shipping Carrier APIs: We'll confirm our system sends the correct data to them, but we aren't testing the internal workings of FedEx's or UPS's systems.
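The carrier-API bullet maps neatly onto a mock: test what your system sends, not what the carrier does with it. Here's a sketch using Python's standard `unittest.mock`; the `request_shipping_label` function, the `create_label` method, and the payload fields are hypothetical stand-ins for your own checkout code and carrier client.

```python
from unittest import mock


def request_shipping_label(carrier_client, order):
    """Hypothetical checkout step: send shipment data to a carrier client.

    Building the payload is our responsibility; what the carrier does with it
    is out of scope for this test plan.
    """
    payload = {
        "order_id": order["id"],
        "weight_kg": round(sum(item["weight_kg"] for item in order["items"]), 2),
        "destination": order["country"],
    }
    return carrier_client.create_label(payload)


def test_sends_correct_payload_to_carrier():
    # Stand-in for the real FedEx/UPS client; no network call is made.
    carrier = mock.Mock()
    carrier.create_label.return_value = {"label_id": "LBL-1"}

    order = {"id": "A-1001", "country": "DE",
             "items": [{"weight_kg": 1.2}, {"weight_kg": 0.3}]}
    result = request_shipping_label(carrier, order)

    # Assert on what we sent, not on how the carrier processes it.
    carrier.create_label.assert_called_once_with(
        {"order_id": "A-1001", "weight_kg": 1.5, "destination": "DE"}
    )
    assert result == {"label_id": "LBL-1"}


test_sends_correct_payload_to_carrier()
print("carrier payload test passed")
```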

Pro Tip: Documenting what's out-of-scope isn't just for the testers. It's a critical communication tool that forces early alignment with stakeholders. It gets everyone in a room to agree on priorities before the work starts, heading off those "But I thought you were testing..." conversations later.

By setting these firm boundaries, you give your team the focus they need to be effective. Everyone from the product owner to the newest developer understands what "done" looks like for this testing cycle. This is how a simple document becomes a roadmap for delivering quality software.

Crafting Your Core Test Strategy

Think of your test strategy as the heart of your entire test plan. It’s not just a list of tasks; it’s the high-level game plan that answers the fundamental question: "How are we actually going to prove this software works and is ready for users?" This is where you define the types of testing you’ll run and decide the crucial balance between manual and automated efforts.

A cookie-cutter approach just won't cut it. The real goal here is to build a strategy that directly targets your project's biggest risks, not just to check boxes on a generic template.

What Kinds of Tests Will You Run?

Picking the right combination of tests is essential. You want to achieve deep coverage without wasting time and resources on things that don't matter for this specific project. As you start outlining your strategy, it's a great idea to explore various quality assurance testing methods to make sure you're not missing any critical angles.

Let's get practical. Imagine you're building a new mobile banking app. Your strategy would laser-focus on a few key areas:

- Functional Testing: This is non-negotiable. It proves that the core features—logging in, checking a balance, transferring money—do exactly what they're supposed to. No exceptions.

- Security Testing: For a banking app, this is mission-critical. Your strategy absolutely must include penetration testing and vulnerability scans to safeguard user data. This is where you protect your users and your reputation.

- Performance Testing: What happens on payday when thousands of users log in at the same second? Performance and load testing will answer that, ensuring your app stays snappy and reliable under pressure.

- Usability Testing: Can a new user intuitively figure out how to transfer funds? Getting the app in front of real people for usability testing provides priceless feedback on the user experience.

For that same app, you might decide to deprioritize extensive cross-browser compatibility testing. Why? Because it’s a native mobile app, and your resources are better spent on the high-risk areas like security and performance.
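To make the payday question concrete, here's a rough Python sketch of what a load test measures: many simulated logins in flight at once, summarized as median and 95th-percentile latency. `fake_login` is a hypothetical stub that just sleeps; a real performance test would hit your actual login endpoint, usually through a dedicated tool such as JMeter, Locust, or k6 rather than a hand-rolled script.

```python
import asyncio
import random
import statistics
import time


async def fake_login(user_id: int) -> float:
    """Hypothetical stub for one login request; returns its latency in seconds."""
    start = time.perf_counter()
    # Simulated server work. A real test would call the actual login endpoint here.
    await asyncio.sleep(random.uniform(0.01, 0.05))
    return time.perf_counter() - start


async def simulate_logins(users: int, max_concurrency: int) -> dict:
    # Cap in-flight requests so the load generator itself stays stable.
    gate = asyncio.Semaphore(max_concurrency)

    async def one_user(uid: int) -> float:
        async with gate:
            # Latency is measured per request, excluding time spent queued at the gate.
            return await fake_login(uid)

    latencies = await asyncio.gather(*(one_user(u) for u in range(users)))
    return {
        "requests": len(latencies),
        "median_s": statistics.median(latencies),
        # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
        "p95_s": statistics.quantiles(latencies, n=100)[94],
    }


report = asyncio.run(simulate_logins(users=1000, max_concurrency=200))
print(report)
```

Reporting a high percentile alongside the median matters because a handful of very slow logins is exactly what users notice on payday, and an average would hide it.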

The Manual vs. Automation Decision

This isn't an "either/or" battle; it's about smart allocation. Your strategy needs to clearly define which tests need a human touch and which are perfect for a machine.

My rule of thumb is this: automation is for repetitive, predictable tasks. Manual testing is for anything that requires human intuition, creativity, or exploration.

Here’s how that breaks down:

Perfect for Automation:

- Regression Tests: Running hundreds of checks on existing features every time new code is pushed? That’s a job for automation.

- Performance Tests: You can't ask 5,000 people to log in at once, but an automation script can simulate that load.

- Data-Driven Tests: Checking a single function with hundreds of different inputs is soul-crushing for a human but trivial for a script.

Best Kept Manual:

- Exploratory Testing: This is where a skilled tester tries to "break" the app with unscripted, creative approaches. It’s about curiosity, not just following a script.

- Usability Testing: You need a person to tell you if an interface feels clunky or confusing. A script can't gauge frustration.

- New, Unstable Features: When a feature is brand new and the requirements are still shifting, manual testing offers the flexibility you need. Writing automation scripts at this stage is often a waste of time.
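The data-driven case from the automation list is easiest to see in code. Here's a sketch using Python's built-in `unittest`, with `subTest` so each input row is reported separately. It borrows the shipping example from the checkout objectives; `shipping_cost` and the rate card are hypothetical, and pytest users would typically reach for `@pytest.mark.parametrize` instead.

```python
import unittest

# Hypothetical rate card: destination -> (base fee, fee per kg), in USD.
RATES = {"US": (5.00, 1.50), "CA": (8.00, 2.00), "UK": (12.00, 3.00)}


def shipping_cost(destination: str, weight_kg: float) -> float:
    """Hypothetical function under test: base fee plus a per-kilogram charge."""
    base, per_kg = RATES[destination]
    return round(base + per_kg * weight_kg, 2)


# One row per case: the inputs change, the test logic stays the same.
CASES = [
    ("US", 0.0, 5.00),
    ("US", 2.0, 8.00),
    ("CA", 1.5, 11.00),
    ("UK", 0.5, 13.50),
    ("UK", 10.0, 42.00),
]


class ShippingCostTest(unittest.TestCase):
    def test_rate_card(self):
        for destination, weight, expected in CASES:
            # subTest reports each failing row separately instead of stopping at the first.
            with self.subTest(destination=destination, weight=weight):
                self.assertAlmostEqual(shipping_cost(destination, weight), expected)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(ShippingCostTest)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Growing this from five rows to five hundred means appending to `CASES`, which is exactly why data-driven checks belong on the automation side of the split.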

To help structure all these moving parts, our complete software testing checklist is a fantastic resource for making sure you’ve covered all your bases.

Putting Your Strategy on Paper

To help you decide which test types make sense for your features, here's a quick comparison.

Choosing the Right Test Type for Your Feature

| Test Type | Primary Goal | Example Application (for a login feature) | Best Suited For |
|---|---|---|---|
| Functional | Verify the feature works as specified. | Can a user log in with valid credentials? | All core features and user flows. |
| Security | Protect against vulnerabilities and attacks. | Can someone bypass the login with an SQL injection? | Features handling sensitive data (PII, financial info). |
| Performance | Ensure speed and stability under load. | How quickly does the login process complete with 1,000 users? | High-traffic features or critical system processes. |
| Usability | Assess ease of use and user experience. | Is the "Forgot Password" link easy for users to find? | User-facing interfaces, especially for new or complex features. |

This table is just a starting point, but it shows how different testing types serve different purposes, even for something as simple as a login screen.
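The functional and security rows for the login feature can share a single harness. Here's a self-contained sketch using Python's built-in `sqlite3` and an in-memory database: one call is the functional check (valid credentials log in), the other tries the classic `' OR '1'='1` injection string. The `login` function and schema are hypothetical, and passwords are stored in plain text only to keep the example short; a real system would store salted hashes.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    # In-memory database with one hypothetical user (plain-text password for brevity only).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES (?, ?)", ("alice", "s3cret"))
    return conn


def login(conn: sqlite3.Connection, username: str, password: str) -> bool:
    # Parameterized query: input is bound as data, never spliced into the SQL text.
    row = conn.execute(
        "SELECT 1 FROM users WHERE username = ? AND password = ?",
        (username, password),
    ).fetchone()
    return row is not None


conn = make_db()
print(login(conn, "alice", "s3cret"))       # functional check: True
print(login(conn, "alice", "' OR '1'='1"))  # security check: False
```

If `login` built the query with string formatting instead of `?` placeholders, the injected password would turn the `WHERE` clause into `... OR '1'='1'` and the second call would return `True`, which is exactly the failure this test is meant to catch.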

Once you’ve settled on the "how," document it clearly in the test plan. The old way of writing test plans is long gone. Modern plans are leaner and more focused. Industry data actually shows that 80% of organizations now view components like scope, timelines, resources, and the test strategy as essential for successful planning.

Your test strategy shouldn’t be a novel. It should be a concise, actionable guide for your team. Clearly state the types of testing you'll do, the tools you'll use, and your reasoning for the manual vs. automation split.

This section becomes the roadmap for your entire QA team. When it's done right, everyone understands the mission and works toward the same definition of quality, turning your test plan from a document into a powerful tool for building great software.

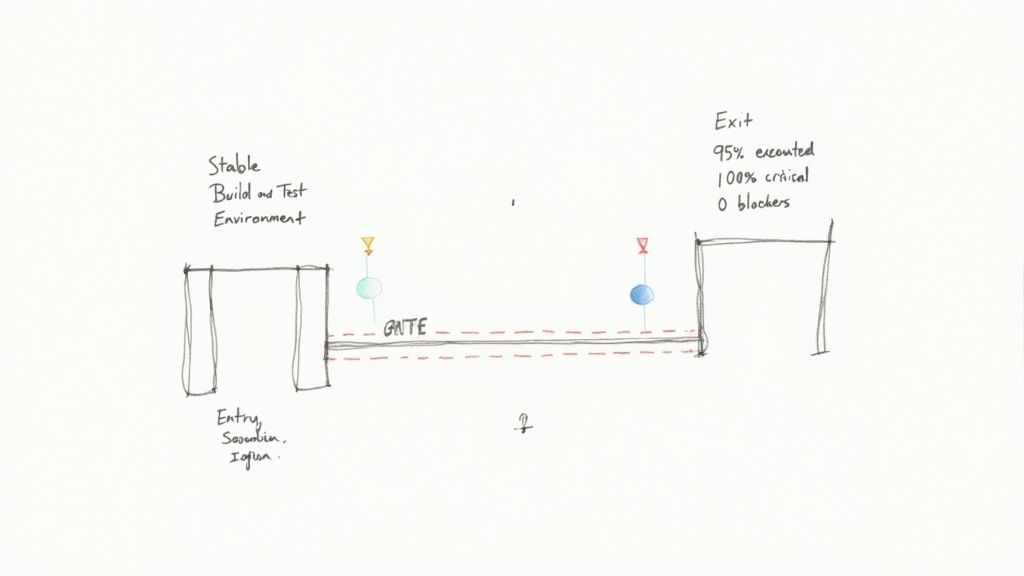

Setting Clear Entry and Exit Criteria

So, when is it actually time to start testing? And maybe even more important, how do you know when you're done? Without clear, data-driven goalposts, testing can quickly devolve into a never-ending cycle of "just one more fix." This is precisely why entry and exit criteria are some of the most practical, non-negotiable parts of your software test plan.

Think of these criteria less as suggestions and more as the firm rules of engagement for your entire QA process. They give you concrete, measurable milestones that eliminate ambiguity and get everyone on the same page about what "ready" and "finished" truly mean.

Defining Your Green Light to Start

Entry criteria are the specific prerequisites that absolutely must be met before a single test case is run. It’s your pre-flight checklist; you wouldn’t take off without running through it first. Kicking off testing before these conditions are met is a recipe for wasted time, forcing your team to wrestle with unstable code or environmental issues instead of finding real bugs.

Here’s what a solid set of entry criteria might look like for a new feature release:

- Code Complete and Deployed: The development team has signed off that all planned features are coded and pushed to the designated test environment.

- Test Environment Stability: The QA server, databases, and any necessary third-party API integrations are confirmed to be online and functional.

- Core Functionality Passes Smoke Tests: A basic, automated smoke test suite runs successfully, confirming the build isn't dead on arrival and is stable enough for deeper testing.

- Test Cases Reviewed and Ready: All test cases for the current testing cycle have been written, peer-reviewed, and approved.

Trying to test before these boxes are ticked is just inefficient. It produces false positives: tests that fail because of infrastructure problems rather than actual software defects. That creates a ton of confusion and rework for everyone.

Entry criteria are there to protect your QA team's time and focus. By establishing a clear "starting line," you ensure that when testing begins, the team can dive straight into evaluating software quality, not troubleshooting the setup.
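If your pipeline can report each prerequisite as a yes/no signal, the entry checklist becomes a simple gate. The sketch below is hypothetical: the `EntryStatus` fields mirror the four example criteria, and in practice the values might come from your CI server, environment health checks, and test management tool.

```python
from dataclasses import dataclass, fields


@dataclass
class EntryStatus:
    # Hypothetical flags, one per entry criterion in the test plan.
    code_complete_and_deployed: bool
    environment_stable: bool
    smoke_tests_passed: bool
    test_cases_approved: bool


def unmet_entry_criteria(status: EntryStatus) -> list[str]:
    """Return the names of entry criteria that are not yet satisfied."""
    return [f.name for f in fields(status) if not getattr(status, f.name)]


status = EntryStatus(
    code_complete_and_deployed=True,
    environment_stable=True,
    smoke_tests_passed=False,  # e.g. the latest build failed its smoke suite
    test_cases_approved=True,
)
blockers = unmet_entry_criteria(status)
print("Ready to start testing" if not blockers else f"Blocked by: {blockers}")
```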

Knowing When to Stop Testing

Just as important as knowing when to start is knowing when you can confidently stop. Exit criteria define the exact moment a testing phase is complete and the software is ready for the next stage, whether that’s UAT or a full production release. These have to be specific, measurable, and agreed upon by all stakeholders.

Fuzzy goals like "most tests passed" don't cut it. You need hard numbers. Here's a real-world example of exit criteria for a new e-commerce checkout feature:

- Execution Rate: 98% of all planned test cases have been executed.

- Pass Rate: A minimum of 95% of all executed tests are in a "Passed" state.

- Critical Test Cases: 100% of test cases marked as "Critical" or "High Priority" must pass. No exceptions.

- Open Bugs: There are zero open "Blocker" or "Critical" defects. No more than five "Major" defects can remain open, and each must have a documented, approved workaround.

These metrics create a clear finish line. Once these conditions are met, the team can declare the testing phase complete, giving stakeholders data-backed confidence that the software is truly ready for launch.
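Because these criteria are numeric, they can be checked mechanically at the end of a cycle. Here's a minimal sketch that encodes the example thresholds for the checkout feature; the `CycleResults` fields are hypothetical names for numbers you'd pull from your test management and bug tracking tools.

```python
from dataclasses import dataclass


@dataclass
class CycleResults:
    planned: int                # test cases planned for this cycle
    executed: int               # test cases actually run
    passed: int                 # executed test cases in a "Passed" state
    critical_planned: int       # planned cases tagged Critical or High Priority
    critical_passed: int        # of those, how many passed
    open_blockers: int          # open Blocker or Critical defects
    open_major: int             # open Major defects
    major_with_workaround: int  # open Major defects with an approved workaround


def exit_criteria_failures(r: CycleResults) -> list[str]:
    """Return every example exit criterion that is not met (empty list = done)."""
    failures: list[str] = []
    # Integer arithmetic avoids float rounding right at the thresholds.
    if r.executed * 100 < 98 * r.planned:
        failures.append("execution rate below 98%")
    if r.passed * 100 < 95 * r.executed:
        failures.append("pass rate below 95% of executed tests")
    if r.critical_passed < r.critical_planned:
        failures.append("not all Critical/High Priority test cases passed")
    if r.open_blockers > 0:
        failures.append("open Blocker/Critical defects")
    if r.open_major > 5:
        failures.append("more than five open Major defects")
    if r.major_with_workaround < r.open_major:
        failures.append("open Major defects without an approved workaround")
    return failures


results = CycleResults(planned=400, executed=394, passed=378,
                       critical_planned=60, critical_passed=60,
                       open_blockers=0, open_major=3, major_with_workaround=3)
print(exit_criteria_failures(results) or "Exit criteria met")
```

Returning the list of unmet criteria, rather than a bare yes/no, gives stakeholders the specific reason a cycle isn't finished yet.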

Planning Your Resources, Schedule, and Deliverables

A brilliant test strategy on paper is one thing; making it happen is another. This is where we get down to the brass tacks—the who, what, and when. A test plan without this logistical backbone is just a document gathering dust. To make it a living, breathing guide for your team, you have to nail down your resources, map out a realistic schedule, and be crystal clear about what you'll deliver at the end.

This is the operational heart of your plan. It’s what turns your high-level strategy into a day-to-day roadmap for your QA team, ensuring everyone knows their part, every task has a deadline, and the final results are understood by everyone.

Getting the Right Team and Tools in Place

First things first: who and what do you need to get the job done? This isn't just about making a list of QA engineers. It’s about building a complete quality ecosystem.

You have to think about the people involved. Who’s on point for what? Getting this clarity upfront prevents crucial tasks from falling through the cracks.

- QA Lead: The owner of the test plan. They’re the conductor of the orchestra, coordinating all testing activities and acting as the main point of contact.

- QA Engineers (Manual & Automation): These are the folks in the trenches writing test cases, executing tests, and building out the automation scripts.

- Developers: Their involvement doesn't end when the code is committed. You need to account for their time for bug fixes and for picking their brains on technical details.

- Product Manager/Owner: The source of truth for requirements. They’re essential for clarifying ambiguities and, ultimately, for signing off during user acceptance testing (UAT).

Once the team is sorted, look at your toolset. The right software makes everything smoother, from communication to data collection.

- Test Management: You need a home for your test cases and a way to track execution. Tools like TestRail or Zephyr are perfect for this.

- Bug Tracking: This is your central nervous system for defects. A system like Jira or Asana ensures bugs are logged, triaged, and tracked from discovery to resolution.

- Automation Framework: The engine that runs your automated tests. This could be something like Selenium for web apps or Appium for mobile.

Building a Timeline That Actually Works

One of the most common traps I see is a testing schedule created in a bubble. For a timeline to be worth anything, it has to be woven into the overall project plan, respecting development milestones and the hard release date.

Start by breaking the work into distinct phases and putting realistic timeframes on them. Here’s a rough idea of what that might look like:

- Test Case Design: Give the team 3 days after the feature is "dev complete."

- Initial Functional Testing: Plan for a 5-day cycle once you have a stable build.

- Automated Regression: This should only take about 1 day and can run alongside bug-fix verification.

- Bug Fix Verification: Set aside 2 days after the first big testing push.

- Final Sanity Check: You'll need about 4 hours right before the release goes out the door.

A schedule isn't just a collection of dates—it's a critical communication tool. Make sure you share it widely with development and project management. This gets everyone aligned on dependencies and prevents that classic end-of-sprint squeeze where QA time is the first thing to get cut.

And please, always build in a buffer. A nasty bug found at the eleventh hour can torpedo a tight schedule. A contingency of 10-15% isn't pessimistic; it's just smart, real-world planning.
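Here's the arithmetic behind that example timeline as a small Python sketch. Two assumptions are mine, not part of the plan itself: an 8-hour working day (so the 4-hour sanity check counts as half a day), and that automated regression fully overlaps bug-fix verification, so only the longer of the two lands on the critical path.

```python
# Phase durations in working days, from the example timeline.
# Assumption (not from the plan): an 8-hour working day.
HOURS_PER_DAY = 8

SEQUENTIAL_PHASES = {
    "test_case_design": 3.0,
    "initial_functional_testing": 5.0,
    "final_sanity_check": 4 / HOURS_PER_DAY,  # 4 hours = 0.5 days
}
# Assumption: regression runs fully in parallel with bug-fix verification,
# so only the longer of the two adds to the schedule.
PARALLEL_PHASES = {
    "automated_regression": 1.0,
    "bug_fix_verification": 2.0,
}


def total_days(buffer_pct: float) -> float:
    """Critical-path length in working days, plus a contingency buffer."""
    base = sum(SEQUENTIAL_PHASES.values()) + max(PARALLEL_PHASES.values())
    return base * (1 + buffer_pct / 100)


for pct in (0, 10, 15):
    print(f"{pct:>2}% buffer: {total_days(pct):.2f} working days")
```

Under these assumptions the plan needs 10.5 working days of testing, and a 10-15% buffer stretches that to roughly 11.6 to 12.1 days.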

Defining What You'll Actually Deliver

Finally, your test plan needs to spell out exactly what artifacts you'll produce. This isn't just busywork; it's about managing stakeholder expectations and providing concrete proof of your team's efforts. These deliverables are the tangible outputs of your entire process.

Keep your list of deliverables straightforward and clear:

- The Test Plan Document: The foundational document we're creating right now.

- Test Cases & Suites: The detailed, step-by-step instructions for every test.

- Test Execution Logs: A running record of which tests were run, who ran them, and the pass/fail results.

- Defect Reports: Individual, detailed reports for every bug, complete with steps to reproduce, severity, and priority.

- Final Test Summary Report: A high-level dashboard for leadership. It should cover what was tested, pass/fail rates, any open critical defects, and a final go/no-go recommendation for the release.
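Most of the numbers in a summary report can be derived straight from the execution logs and defect reports. Here's a sketch with hypothetical log rows; it keeps only the latest result for each test case, on the assumption that a re-run after a bug fix supersedes the earlier failure. The go/no-go call itself is still a judgment, made by comparing these numbers against your exit criteria.

```python
from collections import Counter

# Hypothetical execution log rows: (test case ID, tester, result), oldest first.
execution_log = [
    ("TC-001", "maria", "Passed"),
    ("TC-002", "maria", "Failed"),
    ("TC-003", "li", "Passed"),
    ("TC-002", "maria", "Passed"),  # re-run after the fix
    ("TC-004", "li", "Failed"),
]
# Hypothetical open defects: (defect ID, severity).
open_defects = [("BUG-17", "Major"), ("BUG-21", "Minor")]


def summarize(log, defects) -> dict:
    # Later rows overwrite earlier ones, so each test case keeps its latest result.
    latest = {case_id: result for case_id, _, result in log}
    outcomes = Counter(latest.values())
    severities = Counter(severity for _, severity in defects)
    executed = len(latest)
    return {
        "runs": len(log),
        "test_cases_executed": executed,
        "passed": outcomes["Passed"],
        "failed": outcomes["Failed"],
        "pass_rate": outcomes["Passed"] / executed if executed else 0.0,
        "open_blocker_or_critical": severities["Blocker"] + severities["Critical"],
        "open_by_severity": dict(severities),
    }


print(summarize(execution_log, open_defects))
```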

By clearly laying out the team, tools, timeline, and deliverables, you create an operational framework that makes your test plan truly work. This is the detailed planning that ensures your QA process runs like a well-oiled machine.

Managing Risks and Aligning Stakeholders

Let's be honest: no project goes exactly as planned. Something always comes up. A great test plan doesn’t ignore this reality; it embraces it. This is where we move from planning the "happy path" to figuring out what to do when things inevitably go sideways.

Thinking about potential roadblocks isn’t about being negative. It’s about being a realist and, more importantly, being prepared. When you spot potential issues early on, you can map out a response that stops a small hiccup from turning into a full-blown crisis that derails your release.

Identifying and Mitigating Common Testing Risks

A solid risk analysis is the backbone of any test plan that's worth its salt. It’s really just a structured way of brainstorming what could go wrong with your testing and deciding on a game plan for each scenario. This proactive step is a huge part of good software project risk management.

I’ve seen a few common risks pop up time and time again. Here’s what they look like and how you can get ahead of them:

- Shrinking Timelines: This one’s a classic. The deadline gets moved up, and suddenly you have half the time. The best defense is to prioritize your test cases with a vengeance. Focus only on the absolute must-have, critical-path functionality and push the "nice-to-have" tests to a later cycle.

- The Test Environment Vanishes: Nothing stops a QA team in its tracks faster than a down environment. You can reduce this risk by scheduling regular health checks on your test servers and having a direct line to the DevOps or IT team for quick fixes.

- A Key Person Is Out: Your star automation engineer calls in sick the day before a major test run. What now? The solution is to cross-train your team and make sure all critical scripts and processes are well-documented so someone else can step in.
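The shrinking-timeline response becomes almost mechanical once every test case carries a priority and a time estimate. Here's a greedy sketch: sort by priority, then fill the remaining hours. The `PlannedTest` fields, the 1-to-3 priority scale, and the hour estimates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlannedTest:
    name: str
    priority: int     # hypothetical scale: 1 = critical path, 3 = nice-to-have
    est_hours: float


def plan_for_budget(cases: list[PlannedTest], budget_hours: float):
    """Greedy cut: take cases in priority order while they fit in the budget."""
    selected, deferred, used = [], [], 0.0
    for case in sorted(cases, key=lambda c: (c.priority, c.est_hours)):
        if used + case.est_hours <= budget_hours:
            selected.append(case.name)
            used += case.est_hours
        else:
            deferred.append(case.name)  # pushed to a later cycle
    return selected, deferred, used


cases = [
    PlannedTest("checkout_happy_path", 1, 4.0),
    PlannedTest("payment_declined", 1, 3.0),
    PlannedTest("shipping_calc_top5", 1, 5.0),
    PlannedTest("coupon_stacking", 2, 4.0),
    PlannedTest("gift_wrap_option", 3, 2.0),
]
selected, deferred, used = plan_for_budget(cases, budget_hours=12)
print("Run now:", selected)
print("Deferred:", deferred)
```

One caveat of a greedy fill: if a large high-priority case doesn't fit, a smaller lower-priority case can still slip in behind it. If you'd rather defer everything from that point on, break out of the loop at the first case that doesn't fit.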

A risk is just a possibility until it becomes a reality. Your mitigation plan is your insurance. Writing these down shows everyone you've thought things through and are prepared for more than just the best-case scenario.

A Checklist for Stakeholder Alignment

A test plan that nobody reads or agrees with is just a document taking up space. Its real value comes from getting everyone on the same page. Getting buy-in from developers, product owners, and business leaders isn't optional—it's essential for the whole thing to work.

This is even more critical when you're working with nearshore or distributed teams. A well-defined plan acts as a single source of truth, making sure everyone is working toward the same goals, no matter what time zone they're in.

Use this checklist to make sure you have solid alignment before you kick things off:

- Scope and Objectives Review: Grab the product owner and project manager and walk them through what's in scope and, just as importantly, what's out of scope. Are we all agreed on the focus?

- Timeline and Milestone Confirmation: Show the testing schedule to the development lead. Do they see any potential bottlenecks or dependencies that could throw a wrench in the works?

- Exit Criteria Agreement: This is a big one. You need explicit sign-off from the business stakeholders on bug tolerance. What do they consider an acceptable number of open Major or Minor defects before the software can go live?

- Roles and Responsibilities Clarity: Make sure everyone, including developers who will be on deck for bug fixes, knows what's expected of them and how much of their time will be needed during the testing phase.

The goal here isn't to have more meetings. It's about having quick, focused conversations to get a firm handshake on the plan. Once you have that, your software test plan becomes more than a document—it becomes a shared promise to deliver a quality product.

Common Questions About Software Test Plans

Even with a solid guide, a few questions always seem to surface when teams are putting together a test plan. Let's tackle some of the most common ones I've run into over the years to help you put these ideas into practice.

Test Plan vs. Test Strategy: What's the Real Difference?

This is, without a doubt, the number one point of confusion. It's easy to see why they get mixed up, but the distinction is crucial.

Think of your test strategy as the high-level, long-term rulebook for your entire organization's approach to quality. It's a foundational document that doesn't change much from project to project. It answers big, directional questions like:

- What's our overall philosophy on test automation?

- What are the non-negotiable quality standards we hold across all products?

- How do we assess and categorize risk at a company level?

A software test plan, on the other hand, is the tactical, project-specific blueprint. It takes the principles from the strategy and applies them to a single, specific release or feature.

For instance, your strategy might state, "We automate all critical-path regression tests." The test plan for your next release would translate that into, "For the v2.4 launch, we will automate the 50 core regression tests and manually verify the new UI components by May 15th." It gets specific.

Here's an analogy I like: The strategy is your quality constitution. The test plan is the specific law you write for a new situation, making sure it always follows the principles laid out in that constitution.

Can You Use a Test Plan in an Agile Environment?

Of course. But it’s not the massive, 50-page tome you’d see in a traditional waterfall project. Creating a rigid, exhaustive document upfront completely misses the point of Agile, which is all about adapting to change.

In an Agile world, test planning is leaner and happens in layers.

- You start with a lightweight master test plan. This document sketches out the big picture: the overall project strategy, the tools you'll use, and the ultimate quality goals.

- Then, for each sprint, you create smaller, more focused sprint-specific test plans. These dive into the details for just the user stories in that particular development cycle.

The whole idea is to keep the documentation just-in-time and collaborative. It's a living guide that gets tweaked and refined after every retrospective, not a contract signed in blood at the start of the project.

What Are the Biggest Mistakes to Avoid?

I've seen a few common missteps that can derail an otherwise solid test plan. The absolute biggest one is treating the plan as a "write it and forget it" task. A test plan that isn't updated as the project pivots is worse than having no plan at all.

Another classic mistake is being too vague. Statements like "ensure a high pass rate" are useless. Get concrete. Define exactly what success looks like—for example, a "95% test case pass rate" for new features before they can be merged.

Finally, a test plan created in a silo is doomed. If you don't get genuine buy-in from developers, product owners, and other stakeholders, it’s just a document. When it becomes a shared agreement that everyone feels ownership over, that’s when it truly works.