Top 10 Enterprise Application Integration Best Practices for 2026

In today's interconnected business environment, the ability to seamlessly link disparate systems is no longer a luxury; it's a core competitive advantage. Effective enterprise application integration (EAI) is the backbone of modern business agility, enabling real-time data flow, unified customer experiences, and streamlined operations across a complex ecosystem of on-premise software, SaaS platforms, and custom applications.

However, achieving a truly cohesive digital infrastructure is fraught with challenges. Many organizations struggle with brittle point-to-point connections, persistent data silos, and mounting technical debt that stifles innovation. Without a clear strategy, integration projects can quickly become a tangled web of dependencies that are difficult to manage, secure, and scale. The consequences are significant: inefficient workflows, poor decision-making due to inconsistent data, and an inability to adapt to market changes.

This guide cuts through the noise and delivers a prioritized, actionable roadmap: the top 10 enterprise application integration best practices that leading companies use to build future-proof systems. It goes beyond theory with practical implementation details covering everything from API-first design and microservices patterns to security, governance, and observability. You will learn how to:

- Design scalable and resilient integration architectures.

- Select the right tools, including iPaaS and ESBs.

- Implement robust data management and security protocols.

- Establish effective governance and monitoring for your entire integration landscape.

Let's dive into the patterns and principles that will transform your integration strategy from a technical challenge into a powerful business enabler.

1. API-First Architecture and Design

An API-first architecture fundamentally shifts the development paradigm by prioritizing the design and creation of APIs before any backend logic or user interfaces are built. Instead of treating APIs as an afterthought, this approach establishes them as the central "contract" that governs how different applications, services, and systems interact. By defining the API specification first, teams create a clear, stable interface that enables parallel development, simplifies integration, and ensures a consistent experience for all consumers, including internal teams, partners, and third-party developers.

This strategy is a cornerstone of modern enterprise application integration best practices because it decouples services, promotes reusability, and accelerates innovation. For instance, Stripe's comprehensive payment API allows thousands of platforms to integrate complex financial services, while Twilio's API-first platform has become a widely adopted standard for embedding communication features into applications. Both companies built their businesses by treating their APIs as first-class products.

Key Implementation Steps

To effectively adopt an API-first approach, organizations should focus on several critical actions that create a robust and scalable integration framework.

- Define a Clear Contract: Use specifications like OpenAPI (formerly Swagger) to create a detailed, machine-readable definition of your API. This contract outlines endpoints, data structures, authentication methods, and error responses, serving as the single source of truth for all development teams.

- Establish Strong Governance: Create and enforce enterprise-wide API governance policies. These standards should cover everything from naming conventions and versioning strategies (e.g., semantic versioning) to security protocols and performance benchmarks, ensuring consistency across all integrated services.

- Prioritize Documentation: Generate comprehensive, interactive documentation directly from your API specification. Include clear explanations, code samples in multiple languages, and a "try-it-out" feature to significantly reduce the integration effort for developers. You can find more details in our complete guide to API design best practices.

- Implement Robust Security: Secure your APIs from the start by implementing rate limiting to prevent abuse, using OAuth 2.0 for delegated authorization (often with JWTs as the access-token format), and enforcing strict access control policies to protect sensitive enterprise data.
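
Rate limiting is commonly implemented as a token bucket. The following is a minimal single-process sketch in Python. The `TokenBucket` class, the `limiter_for` helper, and the limits used are illustrative assumptions. A gateway running several instances would normally keep this state in a shared store instead.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class TokenBucket:
    """Token-bucket rate limiter: allows bursts of up to `capacity`
    requests and refills at `refill_rate` tokens per second."""
    capacity: float
    refill_rate: float
    clock: Callable[[], float] = time.monotonic  # injectable for testing
    tokens: float = field(init=False, default=0.0)
    last_refill: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start full so an initial burst is allowed
        self.last_refill = self.clock()

    def allow(self) -> bool:
        """Consume one token and return True, or return False if throttled."""
        now = self.clock()
        elapsed = now - self.last_refill
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One bucket per API key; the limits below are illustrative placeholders.
_buckets: Dict[str, TokenBucket] = {}


def limiter_for(api_key: str) -> TokenBucket:
    bucket = _buckets.get(api_key)
    if bucket is None:
        bucket = _buckets[api_key] = TokenBucket(capacity=10, refill_rate=5.0)
    return bucket
```

A gateway would call `limiter_for(api_key).allow()` on each request. When it returns `False`, the gateway would respond with HTTP 429 (Too Many Requests).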

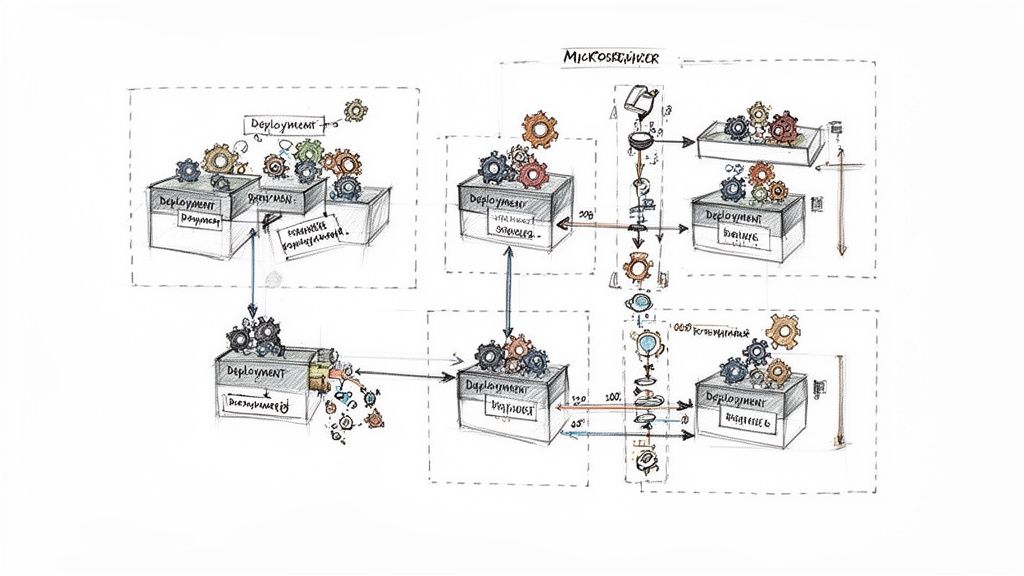

2. Microservices Architecture Pattern

A microservices architecture pattern breaks down a monolithic enterprise application into a collection of smaller, independently deployable services. Each service is designed around a specific business capability and communicates with others through well-defined, lightweight APIs. This decentralized approach allows teams to develop, deploy, and scale individual components without impacting the entire system, fostering agility and resilience.

This modular structure is a fundamental pillar of modern enterprise application integration best practices because it enhances flexibility and accelerates development cycles. For example, Netflix famously transitioned from a monolith to hundreds of fine-grained microservices, enabling it to innovate rapidly and serve over 200 million subscribers globally. Similarly, Amazon's retail platform uses this pattern to manage distinct services like inventory, payments, and order fulfillment, allowing each to scale independently based on demand.

Key Implementation Steps

Successfully transitioning to or building a microservices architecture requires a strategic approach focused on clear boundaries, robust communication, and operational excellence.

- Define Service Boundaries with Domain-Driven Design (DDD): Use DDD principles to identify logical business domains and define clear boundaries for each microservice. This ensures services are loosely coupled and highly cohesive, preventing them from becoming a distributed monolith.

- Implement an API Gateway: Deploy an API Gateway as a single entry point for all client requests. This gateway handles cross-cutting concerns like authentication, rate limiting, and request routing, simplifying the client-to-service communication model.

- Leverage Containerization and Orchestration: Use tools like Docker to containerize services and Kubernetes to orchestrate their deployment, scaling, and management. This standardizes the operational environment and automates complex lifecycle tasks. A deep dive into this topic is available in our guide to ecommerce microservices architecture.

- Establish Asynchronous Communication: Employ event-driven patterns and message brokers (e.g., RabbitMQ, Apache Kafka) for inter-service communication. This decouples services and improves system resilience by allowing them to operate even when other services are temporarily unavailable.
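
Here is a hypothetical in-process sketch of the gateway's two cross-cutting jobs named above: authentication and request routing. `ApiGateway`, the route prefixes, and the API key are invented for illustration. A real deployment would use a dedicated gateway product and forward requests over the network.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Set, Tuple

# A backend service is modeled as a function: request path -> (status, body).
Handler = Callable[[str], Tuple[int, str]]


@dataclass
class ApiGateway:
    routes: Dict[str, Handler]  # path prefix -> backend service
    valid_keys: Set[str]        # API keys accepted at the edge

    def handle(self, path: str, api_key: Optional[str]) -> Tuple[int, str]:
        # Cross-cutting concern handled once, at the edge, not in every service.
        if api_key not in self.valid_keys:
            return 401, "unauthorized"
        # Longest-prefix routing: /orders/returns can go to a different
        # service than /orders.
        matches = [
            prefix for prefix in self.routes
            if path == prefix or path.startswith(prefix.rstrip("/") + "/")
        ]
        if not matches:
            return 404, "no route"
        return self.routes[max(matches, key=len)](path)


# Usage with two hypothetical backend services.
gateway = ApiGateway(
    routes={
        "/orders": lambda p: (200, f"orders-service handled {p}"),
        "/orders/returns": lambda p: (200, f"returns-service handled {p}"),
    },
    valid_keys={"demo-key"},
)
```

Longest-prefix matching lets a team split a new service out of an existing path without changing client URLs.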

3. Enterprise Service Bus (ESB) Implementation

An Enterprise Service Bus (ESB) is an architectural pattern where a centralized software component performs integrations between applications. It acts as a central messaging hub, enabling asynchronous communication, data transformation, message routing, and protocol translation between disparate systems. By channeling all communication through the ESB, organizations can decouple applications, allowing legacy systems to interact with modern services without requiring direct, point-to-point connections.

This approach is a classic example of enterprise application integration best practices, providing a robust, manageable backbone for complex environments. For instance, MuleSoft's Anypoint Platform is used by enterprises to connect hundreds of on-premise and cloud applications. Its Mule runtime began as an open-source ESB, and Salesforce acquired MuleSoft in 2018. Similarly, IBM Integration Bus (now IBM App Connect Enterprise) and TIBCO BusinessWorks have long centralized complex integration logic in industries such as finance and logistics.

Key Implementation Steps

To successfully deploy an ESB, organizations must establish strong governance and operational practices to prevent it from becoming a monolithic bottleneck.

- Define Clear Governance Policies: Standardize message schemas, data formats (e.g., XML, JSON), and communication protocols across the enterprise. Strong governance ensures that as new services are added, they adhere to consistent integration patterns, reducing complexity and maintenance overhead.

- Implement Comprehensive Monitoring: Set up detailed logging and monitoring for all message flows, transformations, and endpoint connections. This visibility is critical for troubleshooting issues, identifying performance bottlenecks, and ensuring the reliability of business-critical integrations.

- Use Dead-Letter Queues (DLQs): Configure DLQs to capture messages that fail to be processed after multiple retries. This mechanism prevents data loss and allows administrators to analyze and reprocess failed transactions without disrupting the entire message flow.

- Design for Scalability and High Availability: Avoid a single point of failure by deploying the ESB in a clustered or high-availability configuration. Regularly conduct capacity planning to ensure the infrastructure can handle peak message loads and accommodate future growth. A hybrid approach combining an ESB with a modern API gateway can also balance centralized control with decentralized agility.
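
The following toy sketch shows the message-handling duties described in this section in one place. It covers parsing an inbound payload, content-based routing, transformation, bounded retries, and a dead-letter queue. `MiniBus` and the sample endpoints are hypothetical. A production ESB adds persistence, back-off, and monitoring.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Message = Dict[str, Any]
Predicate = Callable[[Message], bool]
Transform = Callable[[Message], Message]
Endpoint = Callable[[Message], None]


@dataclass
class MiniBus:
    """Toy, in-process illustration of ESB duties: parse (format translation),
    content-based routing, transformation, bounded retries, and a DLQ."""
    max_attempts: int = 3
    routes: List[Tuple[Predicate, Transform, Endpoint]] = field(default_factory=list)
    dead_letters: List[Tuple[Message, str]] = field(default_factory=list)

    def route(self, predicate: Predicate, transform: Transform, endpoint: Endpoint) -> None:
        self.routes.append((predicate, transform, endpoint))

    def publish(self, raw: str) -> None:
        message = json.loads(raw)  # inbound wire format -> internal message
        for predicate, transform, endpoint in self.routes:
            if predicate(message):  # first matching route wins
                self._deliver(transform(message), endpoint)
                return
        self.dead_letters.append((message, "no matching route"))

    def _deliver(self, message: Message, endpoint: Endpoint) -> None:
        last_error = ""
        for _ in range(self.max_attempts):
            try:
                endpoint(message)
                return
            except Exception as exc:  # a real bus would also back off between attempts
                last_error = repr(exc)
        # Attempts exhausted: park the message so it cannot block the flow.
        self.dead_letters.append((message, last_error))


# Usage: EU orders are enriched and delivered; everything else hits a failing endpoint.
delivered: List[Message] = []
attempts = {"legacy": 0}


def legacy_endpoint(message: Message) -> None:
    attempts["legacy"] += 1
    raise ConnectionError("legacy system unavailable")


bus = MiniBus()
bus.route(lambda m: m.get("region") == "EU", lambda m: {**m, "currency": "EUR"}, delivered.append)
bus.route(lambda m: True, lambda m: m, legacy_endpoint)
bus.publish('{"order_id": 1, "region": "EU"}')
bus.publish('{"order_id": 2, "region": "US"}')
```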

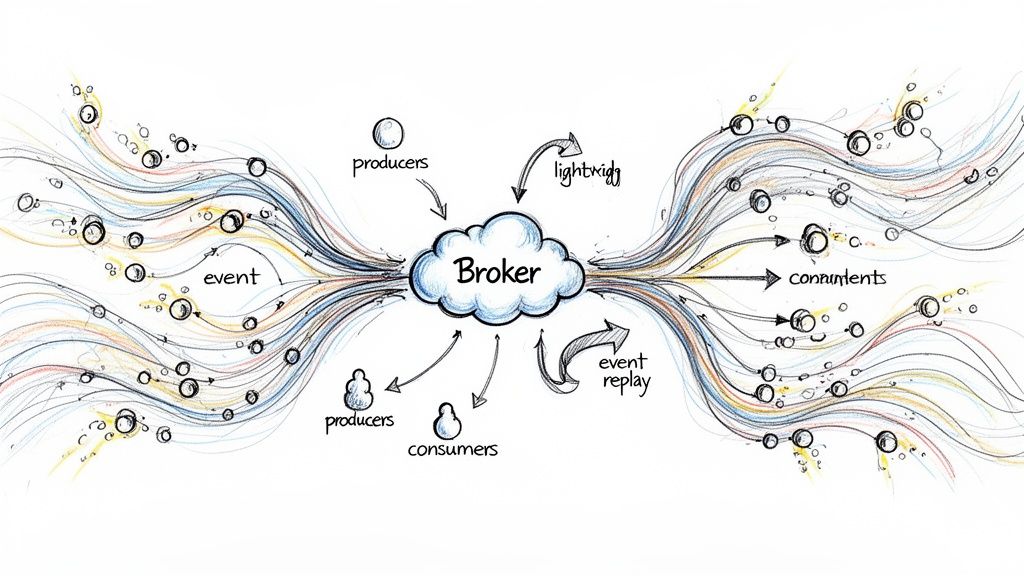

4. Event-Driven Architecture (EDA)

An Event-Driven Architecture (EDA) is a paradigm that promotes the production, detection, consumption of, and reaction to events. Instead of services making direct, synchronous requests to each other, they communicate asynchronously by publishing events to a central message broker or event stream. Other services subscribe to these events and react accordingly, creating a highly decoupled and scalable system where components can operate independently.

This approach is one of the most powerful enterprise application integration best practices for building responsive, real-time systems. For example, LinkedIn leverages EDA to update user feeds in real time as connections post new content, and Uber uses it to coordinate complex ride logistics by publishing events that trigger downstream services for billing, driver matching, and notifications. This loose coupling enables services to be updated, deployed, and scaled without impacting the entire system.
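
Here is a minimal sketch of this decoupling. It assumes an in-memory stand-in for a broker such as Kafka or RabbitMQ, and the producer publishes a hypothetical `RideCompleted` event without knowing who consumes it. The billing consumer also anticipates the idempotency requirement discussed below by skipping events it has already processed. All class and event names are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, Dict, List, Set


@dataclass(frozen=True)
class Event:
    event_id: str            # unique ID, used by consumers for deduplication
    event_type: str          # e.g. "RideCompleted"
    payload: Dict[str, Any]


class InMemoryBroker:
    """Stand-in for a real broker: producers publish by event type and
    never reference the services that consume the event."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event: Event) -> None:
        for handler in self._subscribers[event.event_type]:
            handler(event)


class BillingService:
    """Idempotent consumer: a redelivered event is recognized and skipped."""

    def __init__(self) -> None:
        self.processed_ids: Set[str] = set()  # in production, a durable store
        self.charges: List[float] = []

    def on_ride_completed(self, event: Event) -> None:
        if event.event_id in self.processed_ids:
            return  # duplicate delivery: already charged
        # Real systems should record the ID and apply the side effect atomically.
        self.charges.append(event.payload["fare"])
        self.processed_ids.add(event.event_id)


broker = InMemoryBroker()
billing = BillingService()
notifications: List[str] = []
broker.subscribe("RideCompleted", billing.on_ride_completed)
broker.subscribe("RideCompleted", lambda e: notifications.append(e.payload["rider"]))

ride = Event("evt-001", "RideCompleted", {"rider": "r-42", "fare": 18.5})
broker.publish(ride)
broker.publish(ride)  # at-least-once delivery can redeliver the same event
```

The notification subscriber is deliberately not idempotent, so the redelivered event triggers a second notification. The deduplication check in billing exists to prevent exactly that kind of duplicate.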

Key Implementation Steps

Adopting an EDA requires careful planning around event design, data consistency, and operational management to unlock its full potential for enterprise integration.

- Choose the Right Event Broker: Select a technology that fits your use case. Use Apache Kafka for high-throughput, durable event streaming with ordering guaranteed within each partition. Opt for a traditional message broker like RabbitMQ when you need complex routing and per-message acknowledgments.

- Define Clear Event Schemas: Establish a standardized, version-controlled format for your events using schemas like Avro or Protobuf. This "event contract" ensures that producers and consumers can reliably communicate, even as the system evolves.

- Design for Idempotency and Failures: Consumers must be designed to be idempotent, meaning they can safely process the same event multiple times without causing duplicate transactions. Implement Dead Letter Queues (DLQs) to handle events that cannot be processed, preventing them from blocking the entire flow.

- Manage Distributed Transactions: Because a single ACID transaction cannot span databases owned by independent services, use patterns like the Saga pattern to manage data consistency across multiple services. A saga coordinates a sequence of local transactions, with compensating actions that undo completed steps if a later step fails.
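
An orchestrated saga can be sketched as follows. This is a simplified, in-process model with hypothetical step functions. In an event-driven system, each step would typically run in a different service and be triggered by an event or command, and the orchestrator's progress would be persisted. An alternative style, choreography, has services react to each other's events without a central coordinator.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]


@dataclass
class SagaStep:
    name: str
    action: Callable[[Context], None]      # local transaction in one service
    compensate: Callable[[Context], None]  # semantic undo for `action`


def run_saga(steps: List[SagaStep], ctx: Context) -> bool:
    """Orchestrated saga: run steps in order; if one fails, run the
    compensations of the already-completed steps in reverse order."""
    log = ctx.setdefault("log", [])
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.action(ctx)
        except Exception as exc:
            log.append(f"{step.name} failed: {exc}")
            for done in reversed(completed):
                done.compensate(ctx)
                log.append(f"compensated {done.name}")
            return False
        completed.append(step)
    return True


# Usage with hypothetical order-processing steps; the payment step fails.
def reserve_stock(ctx: Context) -> None:
    ctx["stock_reserved"] = True

def release_stock(ctx: Context) -> None:
    ctx["stock_reserved"] = False

def charge_card(ctx: Context) -> None:
    raise RuntimeError("card declined")

def refund_card(ctx: Context) -> None:
    ctx["refunded"] = True

def book_shipment(ctx: Context) -> None:
    ctx["shipment_booked"] = True

def cancel_shipment(ctx: Context) -> None:
    ctx["shipment_booked"] = False


order: Context = {"order_id": "o-1001"}
succeeded = run_saga(
    [
        SagaStep("reserve_stock", reserve_stock, release_stock),
        SagaStep("charge_card", charge_card, refund_card),
        SagaStep("book_shipment", book_shipment, cancel_shipment),
    ],
    order,
)
```

Only completed steps are compensated. Because the card charge never succeeded, no refund runs and the shipment is never booked.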

5. Master Data Management (MDM)

Master Data Management (MDM) is a discipline that establishes a single, authoritative source of truth for an organization's most critical data assets, such as customer, product, employee, and location information. Instead of allowing siloed applications to maintain their own conflicting versions of data, MDM centralizes the process of creating, maintaining, and governing these "golden records." This ensures that when different systems communicate, they are all referencing the same consistent, high-quality information.

This approach is one of the most crucial enterprise application integration best practices because it eliminates data redundancy and inconsistency, which are primary causes of integration failure and flawed business intelligence. For example, a bank using MDM can ensure that a customer's updated address is propagated correctly across its retail banking, investment, and loan applications simultaneously. Leading platforms like Informatica MDM and SAP Master Data Governance provide the frameworks for large enterprises to achieve this unified data view, preventing costly errors and improving operational efficiency.

Key Implementation Steps

To successfully integrate an MDM strategy, organizations must focus on a structured rollout that combines technology with strong governance and clear processes.

- Prioritize a High-Impact Data Domain: Begin your MDM initiative by focusing on a single, critical data domain like "Customer" or "Product." This allows you to demonstrate value quickly, refine your processes, and secure buy-in before expanding to more complex domains.

- Establish Robust Data Governance: Define clear ownership and stewardship for master data. Create a governance council responsible for setting policies on data quality, access, and lifecycle management. This ensures accountability and maintains data integrity over time.

- Implement a "Golden Record" System: Use advanced data matching, cleansing, and fuzzy logic algorithms to identify and merge duplicate records from various source systems. This process creates a consolidated, authoritative "golden record" that serves as the single source of truth for all integrated applications.

- Create Data Quality Feedback Loops: Implement processes and tools that allow business users to identify and report data quality issues directly. This feedback should flow back to data stewards for correction, creating a continuous cycle of improvement that enhances data reliability across the enterprise.
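
The matching and merging step can be illustrated with a small sketch using Python's standard-library `difflib`. The following choices are assumptions made for illustration:

- The match rule: same email, or a similar name plus the same postal code.
- A 0.85 name-similarity threshold.
- A "latest non-empty value wins" survivorship rule.

Commercial MDM platforms offer far richer, configurable matching.

```python
from difflib import SequenceMatcher
from typing import Any, Dict, List

Record = Dict[str, Any]


def _norm(text: str) -> str:
    return " ".join(text.lower().split())  # case- and whitespace-insensitive


def is_match(a: Record, b: Record, name_threshold: float = 0.85) -> bool:
    """Match rule: same email, or a similar name plus the same postal code."""
    email_a = (a.get("email") or "").strip().lower()
    email_b = (b.get("email") or "").strip().lower()
    if email_a and email_a == email_b:
        return True
    similarity = SequenceMatcher(None, _norm(a["name"]), _norm(b["name"])).ratio()
    return similarity >= name_threshold and a.get("postal_code") == b.get("postal_code")


def merge(cluster: List[Record]) -> Record:
    """Survivorship rule: the most recently updated non-empty value wins."""
    golden: Record = {}
    # ISO-8601 date strings sort chronologically.
    for rec in sorted(cluster, key=lambda r: r["updated_at"]):
        for field_name, value in rec.items():
            if field_name != "source" and value not in (None, ""):
                golden[field_name] = value
    golden["sources"] = sorted({rec["source"] for rec in cluster})  # lineage
    return golden


def build_golden_records(records: List[Record]) -> List[Record]:
    clusters: List[List[Record]] = []
    for rec in records:
        for cluster in clusters:
            if any(is_match(rec, member) for member in cluster):
                cluster.append(rec)
                break
        else:
            clusters.append([rec])
    return [merge(cluster) for cluster in clusters]


crm_rec = {"source": "crm", "name": "Jane  Doe", "email": "jane@example.com",
           "postal_code": "10001", "phone": "", "updated_at": "2025-01-10"}
billing_rec = {"source": "billing", "name": "jane doe", "email": "JANE@example.com",
               "postal_code": "10001", "phone": "555-0100", "updated_at": "2025-03-02"}
support_rec = {"source": "support", "name": "John Smith", "email": "john@example.com",
               "postal_code": "94105", "phone": "", "updated_at": "2025-02-01"}
golden = build_golden_records([crm_rec, billing_rec, support_rec])
```

The pairwise comparison is quadratic in the number of records. At enterprise scale, matching usually narrows candidates first, for example by comparing only records that share a postal code.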

6. Integration Platform as a Service (iPaaS)

Integration Platform as a Service (iPaaS) offers a cloud-based suite of tools that simplifies the process of connecting disparate applications, data sources, and services. It delivers a managed environment with pre-built connectors, low-code/no-code workflow builders, and data mapping capabilities, effectively abstracting away the complexities of underlying infrastructure. This allows organizations to build, deploy, and manage integration flows much faster than traditional, custom-coded approaches.

Adopting an iPaaS is a critical enterprise application integration best practice for organizations aiming for agility and cost-efficiency. Platforms like Boomi AtomSphere and MuleSoft Anypoint Platform enable complex hybrid integrations, connecting on-premise legacy systems with modern cloud services. Similarly, Workato and Zapier empower both IT professionals and "citizen integrators" to automate workflows between thousands of SaaS applications, drastically reducing manual effort and accelerating business processes without extensive development cycles.

Key Implementation Steps

To leverage an iPaaS solution effectively, organizations should adopt a strategic approach that maximizes its value while mitigating potential risks.

- Evaluate Pre-Built Connectors: Before committing to a platform, thoroughly assess its library of pre-built connectors for your critical applications (e.g., Salesforce, SAP, NetSuite). Prioritizing solutions with robust, out-of-the-box support for your tech stack minimizes the need for costly and time-consuming custom development.

- Start with Pilot Projects: Begin with a small-scale, non-critical pilot project to validate the iPaaS platform's suitability for your specific needs. This allows your team to gain hands-on experience, understand the platform's limitations, and refine your integration strategy before a full-scale rollout.

- Establish Strong Governance: Implement clear governance policies for your iPaaS environment. Define who can create, modify, and deploy integration flows, establish naming conventions, and create a process for documenting all transformation logic to maintain control and ensure consistency as usage grows.

- Plan for Scalability and Cost: Carefully analyze the platform's pricing model in relation to your projected data volumes and transaction frequencies. Choose a model that aligns with your growth strategy to avoid unexpected costs, and ensure the platform can handle future performance requirements.
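
Conceptually, an iPaaS data mapper defines field-level mappings like the hypothetical one below. Expressing a mapping as plain data is one way to keep transformation logic reviewable and documented, in line with the governance step above. The CRM and ERP field names are invented for illustration.

```python
from datetime import datetime
from typing import Any, Callable, Dict, Tuple

# target field -> (source field, transform applied to the source value)
FieldMapping = Dict[str, Tuple[str, Callable[[Any], Any]]]

# Hypothetical mapping from a CRM "account" payload to an ERP "customer"
# payload. Field names are illustrative, not any vendor's real schema.
ACCOUNT_TO_CUSTOMER: FieldMapping = {
    "customer_name": ("Name", str.strip),
    "country_code": ("BillingCountry", str.upper),
    "created_on": ("CreatedDate", lambda v: datetime.fromisoformat(v).date().isoformat()),
}


def apply_mapping(source: Dict[str, Any], mapping: FieldMapping) -> Dict[str, Any]:
    """Build the target payload field by field; fail loudly on missing input."""
    target: Dict[str, Any] = {}
    for target_field, (source_field, transform) in mapping.items():
        if source_field not in source:
            raise KeyError(f"missing source field {source_field!r} for {target_field!r}")
        target[target_field] = transform(source[source_field])
    return target


customer = apply_mapping(
    {"Name": "  Acme Corp ", "BillingCountry": "us", "CreatedDate": "2025-04-01T09:30:00"},
    ACCOUNT_TO_CUSTOMER,
)
```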

7. Data Integration and ETL Best Practices

Effective data integration is the process of moving and preparing data for analytics, reporting, and operational use cases. It involves processes like ETL (Extract, Transform, Load), its modern counterpart ELT (Extract, Load, Transform), and real-time streaming pipelines. These practices ensure that clean, consistent, and reliable data flows from various source systems into centralized repositories like data warehouses or data lakes, making it available for decision-making and data-driven applications.

This discipline is a critical pillar of enterprise application integration best practices because it directly impacts the quality and timeliness of business intelligence. For example, Netflix relies on Kafka-based data pipelines for near-real-time analysis of user interactions, while Airbnb created and open-sourced Apache Airflow to orchestrate its complex data workflows. These companies demonstrate that robust data integration is essential for turning raw operational data into a strategic asset.

Key Implementation Steps

To build resilient and scalable data pipelines, organizations should adopt a structured approach focused on automation, quality, and performance.

- Orchestrate and Version Control Workflows: Use an orchestrator such as Apache Airflow to define, schedule, and monitor complex data pipelines as code, and a tool such as dbt to manage SQL transformations. Store all transformation logic in a version control system like Git to enable collaboration, track changes, and support CI/CD practices for your data pipelines.

- Implement Rigorous Data Quality Checks: Integrate automated data quality tests at every stage of your pipeline, from extraction to loading. Check for null values, duplicates, format inconsistencies, and business rule violations to prevent "garbage in, garbage out" scenarios and build trust in your data.

- Design for Idempotency and Fault Tolerance: Ensure your data pipelines are idempotent, meaning they can be re-run multiple times without creating duplicate data or causing side effects. This is crucial for safely recovering from failures. Implement robust error alerting and automated recovery mechanisms to maintain pipeline reliability.

- Optimize for Performance and Freshness: Use incremental loading strategies to process only new or changed data, significantly reducing processing time and cost. Monitor key pipeline metrics like data latency and job duration to ensure data freshness meets business requirements and to identify performance bottlenecks.
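
The idempotency and incremental-loading practices above can be combined in one small sketch. It assumes a SQLite target (UPSERT syntax requires SQLite 3.24 or later) and a source that exposes an `updated_at` timestamp. The table names and the `load_increment` function are hypothetical. Rows are upserted by primary key and the watermark advances in the same transaction, so re-running a load does not create duplicates.

```python
import sqlite3
from typing import Any, Dict, List

Row = Dict[str, Any]


def init_schema(conn: sqlite3.Connection) -> None:
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS dim_customer ("
            "id INTEGER PRIMARY KEY, email TEXT NOT NULL, updated_at TEXT NOT NULL)"
        )
        conn.execute(
            "CREATE TABLE IF NOT EXISTS etl_watermark ("
            "pipeline TEXT PRIMARY KEY, last_updated_at TEXT NOT NULL)"
        )


def load_increment(conn: sqlite3.Connection, source_rows: List[Row],
                   pipeline: str = "customers") -> int:
    """Incremental, idempotent load: extract rows changed since the stored
    watermark, validate them, then upsert rows and advance the watermark
    in one transaction. Returns the number of rows loaded."""
    found = conn.execute(
        "SELECT last_updated_at FROM etl_watermark WHERE pipeline = ?", (pipeline,)
    ).fetchone()
    watermark = found[0] if found else ""
    changed = [r for r in source_rows if r["updated_at"] > watermark]

    # Data quality gate: reject the batch rather than load bad rows.
    invalid = [r["id"] for r in changed if not r.get("email")]
    if invalid:
        raise ValueError(f"rows failing quality checks: {invalid}")

    with conn:  # commits on success, rolls back on exception
        conn.executemany(
            "INSERT INTO dim_customer (id, email, updated_at) VALUES (:id, :email, :updated_at) "
            "ON CONFLICT(id) DO UPDATE SET email = excluded.email, updated_at = excluded.updated_at",
            changed,
        )
        if changed:
            conn.execute(
                "INSERT INTO etl_watermark (pipeline, last_updated_at) VALUES (?, ?) "
                "ON CONFLICT(pipeline) DO UPDATE SET last_updated_at = excluded.last_updated_at",
                (pipeline, max(r["updated_at"] for r in changed)),
            )
    return len(changed)
```

The strict `>` comparison can miss late-arriving rows that share the watermark's timestamp. Production pipelines often re-read a small lookback window, which the upsert makes safe, or use change data capture instead.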

8. Container Orchestration and Kubernetes for Integration

Container orchestration platforms like Kubernetes automate the deployment, scaling, and management of containerized applications, including the microservices and middleware that drive modern integration. By packaging integration components into lightweight, portable containers, organizations can deploy them consistently across any environment, from on-premise data centers to public clouds. Kubernetes then manages the entire lifecycle of these containers, ensuring high availability, resilience, and efficient resource utilization.

This approach is one of the most critical enterprise application integration best practices for building scalable and fault-tolerant systems. Instead of deploying integration logic on monolithic virtual machines, Kubernetes allows each component to run as an independent, isolated service. For example, Shopify runs its platform on Kubernetes, which helps keep its e-commerce storefronts responsive and reliable during peak traffic events like Black Friday. This model provides the operational agility needed to manage complex, distributed integration workloads effectively.

Key Implementation Steps

To leverage container orchestration for integration, organizations should adopt a structured approach that simplifies management and maximizes the benefits of the platform.

- Start with Managed Services: Begin your journey with managed Kubernetes offerings like Amazon EKS, Google Kubernetes Engine (GKE), or Azure Kubernetes Service (AKS). These services handle the operational overhead of managing the Kubernetes control plane, allowing your team to focus on deploying and managing integration services rather than infrastructure.

- Use Helm for Application Management: Adopt Helm charts to template, manage, and deploy your integration applications onto Kubernetes. Helm simplifies the process of configuring complex deployments, managing dependencies, and performing version-controlled upgrades or rollbacks, bringing consistency to your release process.

- Implement Robust Observability: Integrate comprehensive logging and monitoring tools like Prometheus for metrics and the ELK Stack (Elasticsearch, Logstash, Kibana) for logs. Centralized observability is essential for troubleshooting distributed integration flows and monitoring the health and performance of your containerized services.

- Automate Deployments with GitOps: Use GitOps tools such as Argo CD or Flux to automate your deployment pipelines. This practice uses a Git repository as the single source of truth for your desired application state, enabling fully automated, auditable, and reliable deployments to your Kubernetes clusters.
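
Argo CD and Flux implement GitOps by continuously comparing the state declared in Git with the live state of the cluster and reconciling any drift. The sketch below models only that core comparison, with a hypothetical `Workload` type standing in for real Kubernetes objects. It plans actions rather than applying them.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Workload:
    image: str
    replicas: int


def plan_reconcile(desired: Dict[str, Workload],
                   live: Dict[str, Workload]) -> List[Tuple[str, str]]:
    """Diff desired state (declared in Git) against live cluster state and
    return the actions that would make the cluster converge on Git."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired.keys() | live.keys()):
        want, have = desired.get(name), live.get(name)
        if have is None:
            actions.append(("create", name))
        elif want is None:
            actions.append(("delete", name))  # "pruning"; usually a configurable option
        elif want != have:
            actions.append(("update", name))
    return actions


desired_state = {
    "orders-sync": Workload("registry.example.com/orders-sync:1.4.0", 3),
    "billing-bridge": Workload("registry.example.com/billing-bridge:2.0.1", 2),
}
live_state = {
    "orders-sync": Workload("registry.example.com/orders-sync:1.3.9", 3),
    "legacy-ftp-poller": Workload("registry.example.com/ftp-poller:0.9", 1),
}
plan = plan_reconcile(desired_state, live_state)
```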

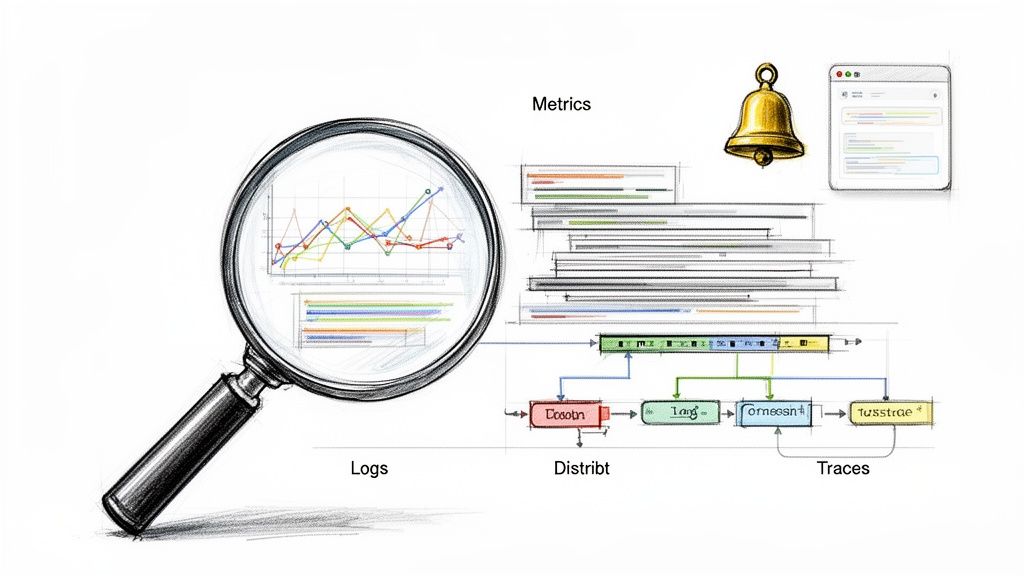

9. Monitoring, Logging, and Observability

In a complex integrated environment, simply knowing if a service is "up" or "down" is insufficient. Observability goes beyond traditional monitoring by combining logs, metrics, and distributed tracing to provide a deep, holistic understanding of a system's internal state. This practice allows engineering teams to not only detect problems but also to ask new questions and understand the "why" behind unexpected behavior, making it a critical component of modern enterprise application integration best practices.

This approach shifts the focus from reactive problem-solving to proactive incident management and system optimization. For instance, Netflix's Atlas platform processes billions of time-series metrics to provide real-time operational insights, while Uber built its M3 metrics platform to collect and query metrics across its massive distributed architecture. Systems like these help maintain reliability and performance at scale by providing granular visibility into every transaction and service interaction.

Key Implementation Steps

To build a robust observability framework, organizations must integrate tools and processes that provide comprehensive insights into their integration landscape.

- Implement Structured Logging: Standardize log formats across all applications using structured data like JSON. This consistency allows for efficient parsing, querying, and analysis in centralized aggregation platforms like the ELK Stack (Elasticsearch, Logstash, Kibana) or Splunk.

- Embrace Distributed Tracing: In microservices architectures, use tools like Jaeger or Zipkin to trace requests as they travel across multiple services. This creates a complete picture of a transaction's lifecycle, making it possible to pinpoint latency bottlenecks and failure points that are otherwise invisible.

- Define Meaningful Metrics and SLOs: Move beyond basic CPU and memory metrics. Define Service Level Objectives (SLOs) tied to key business outcomes, such as transaction success rate or API response time. Use these SLOs to configure proactive alerts that notify teams before a minor issue impacts end-users.

- Correlate All Telemetry Data: The true power of observability comes from correlating logs, metrics, and traces. Utilize Application Performance Monitoring (APM) tools like Datadog or New Relic to link a spike in latency (metric) to a specific slow database query (trace) and the corresponding error messages (logs), dramatically reducing mean time to resolution (MTTR).
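
The following sketch ties three of these practices together using only Python's standard library:

- A `traceparent` value in the W3C Trace Context format.
- JSON log lines that carry its trace ID for correlation.
- An error-budget calculation for an SLO.

The logger name and helper functions are illustrative. In practice, an instrumentation library such as OpenTelemetry would generate and propagate trace context automatically.

```python
import json
import logging
import secrets


def new_traceparent() -> str:
    """Create a W3C Trace Context `traceparent` header value:
    version-trace_id(32 hex)-parent_id(16 hex)-flags ("01" = sampled)."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def trace_id_of(traceparent: str) -> str:
    return traceparent.split("-")[1]


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per log line so aggregators can index fields."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "ts": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Supplied via `extra=`; joins this log line to its trace.
            "trace_id": getattr(record, "trace_id", None),
        })


def error_budget_remaining(slo: float, total: int, failed: int) -> float:
    """Share of the error budget left in a window (negative = overspent).
    Example: slo=0.999 over 1,000,000 requests allows 1,000 failures."""
    if total == 0:
        return 1.0
    allowed = (1 - slo) * total
    if allowed <= 0:
        return 1.0 if failed == 0 else 0.0
    return 1 - failed / allowed


logger = logging.getLogger("integration.orders")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

traceparent = new_traceparent()  # would be forwarded as an HTTP header downstream
logger.info("order %s synced to ERP", "o-1001", extra={"trace_id": trace_id_of(traceparent)})
```

With a 99.9% success-rate SLO, 250 failures out of 1,000,000 requests consume a quarter of the budget. An alert could fire when the remaining share drops below a chosen threshold.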

10. Security, Governance, and Data Privacy in Integration

Integrating applications inevitably involves moving sensitive data across system boundaries, making security, governance, and privacy a non-negotiable foundation. This practice involves embedding robust controls directly into the integration fabric to protect data in transit and at rest and to enforce access policies. It also ensures compliance with regulations like GDPR and HIPAA and with audit frameworks such as SOC 2. It treats security not as a final checkpoint but as an integral part of the integration lifecycle, from design to deployment.

This holistic approach is a critical enterprise application integration best practice because a single vulnerability can compromise entire systems. For instance, financial institutions use API gateways to enforce strict OAuth 2.0 authentication and authorization for all payment processing integrations, while healthcare providers implement field-level access controls to protect patient data in compliance with HIPAA. These measures build trust, mitigate risk, and ensure that integrations are both functional and secure.

Key Implementation Steps

To effectively integrate security and governance, organizations must adopt a multi-layered strategy that addresses technical, procedural, and regulatory requirements.

- Implement Modern Authentication and Authorization: Use industry-standard protocols like OAuth 2.0 and OpenID Connect (OIDC) for delegated access and secure authentication. For stateless communication common in microservices, leverage JSON Web Tokens (JWT) and implement a strict key rotation policy.

- Centralize Policy Enforcement with an API Gateway: Deploy an API gateway to act as a single entry point for all integrated services. Use it to centralize security policies, enforce rate limiting to mitigate abuse and some denial-of-service traffic, manage API keys, and log all access attempts for auditing purposes.

- Embed Privacy by Design: Proactively build privacy controls into your integration architecture. This includes maintaining detailed data lineage records, enforcing data retention policies, and implementing consent management. It also helps to align your controls with recognized frameworks, which involves understanding the intersection of ISO 27001 and data privacy laws.

- Adopt a Shift-Left Security Mindset: Integrate security practices early in the development lifecycle rather than waiting for pre-production audits. By incorporating security from the initial design phase, teams can identify and remediate vulnerabilities more efficiently. You can learn more about this proactive approach in our guide to shift-left security.
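
To show what token validation involves, here is a standard-library-only sketch that verifies an HS256-signed JWT. It pins the algorithm, compares the signature in constant time, and checks the `exp` and `aud` claims. It is illustrative rather than production-ready: prefer a vetted library such as PyJWT. Many deployments also use asymmetric algorithms (for example, RS256) with keys published by the identity provider. The secret and claim values are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _sign(signing_input: str, secret: bytes) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def sign_hs256(claims: Dict[str, Any], secret: bytes) -> str:
    """Issue a compact HS256 JWT (demo only; normally the identity provider issues tokens)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = _b64url_encode(_sign(f"{header}.{payload}", secret))
    return f"{header}.{payload}.{signature}"


def verify_hs256(token: str, secret: bytes, audience: str,
                 now: Optional[float] = None) -> Dict[str, Any]:
    """Check algorithm, signature, expiry, and audience; return claims or raise ValueError."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":  # pin the algorithm; never trust the token's choice
        raise ValueError("unexpected algorithm")
    expected = _sign(f"{header_b64}.{payload_b64}", secret)
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):  # constant-time
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" not in claims or current >= claims["exp"]:
        raise ValueError("token expired or missing exp")
    if claims.get("aud") != audience:  # simplified: JWT also allows a list of audiences
        raise ValueError("wrong audience")
    return claims
```

Key rotation is usually handled by including a `kid` (key ID) in the token header, so the verifier can select the matching key while old and new keys overlap.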

Enterprise Application Integration Best Practices: 10-Point Comparison

| Integration Pattern | 🔄 Implementation complexity | ⚡ Resource requirements | 📊 Expected outcomes | 💡 Ideal use cases | ⭐ Key advantages |
|---|---|---|---|---|---|
| API-First Architecture and Design | Medium: requires upfront contract design and versioning | Moderate: API gateways, docs, SDKs, dev effort | Reusable APIs, parallel dev, multi-client support | Multi-platform products, third-party integrations, SDK ecosystems | ⭐⭐⭐⭐ Decoupling, scalability, easier testing |
| Microservices Architecture Pattern | High: service decomposition, orchestration, DDD required | High: CI/CD, containers, monitoring, SRE teams | Independent deploys, scalable services, fault isolation | Large-scale apps, rapid iteration, distributed teams | ⭐⭐⭐⭐ Independent scaling, resilience, team autonomy |
| Enterprise Service Bus (ESB) Implementation | Medium–High: central routing, governance and orchestration | Moderate–High: messaging infra, operational overhead, possible licensing | Centralized integration, protocol translation, reliable delivery | Integrating legacy systems with modern apps, heterogeneous protocols | ⭐⭐⭐ Centralized control, legacy support, guaranteed delivery |
| Event-Driven Architecture (EDA) | High: event modeling, schema/versioning, broker ops | High: event brokers (Kafka), storage, traceability tooling | Real-time processing, high throughput, loose coupling | Real-time feeds, streaming analytics, reactive systems | ⭐⭐⭐⭐ Scalability, responsiveness, event audit trails |
| Master Data Management (MDM) | High: governance, data modeling, organizational change | High: MDM tools, data stewards, ongoing operations | Single source of truth, improved data quality and compliance | Enterprises with many data sources, regulated industries | ⭐⭐⭐⭐ Consistent master data, compliance, better analytics |
| Integration Platform as a Service (iPaaS) | Low–Medium: low-code connectors, less custom infra | Low: managed cloud, pre-built connectors, minimal ops | Rapid integrations, lower TCO, faster time-to-value | SMEs, SaaS integrations, quick prototyping | ⭐⭐⭐ Fast deployment, ease of use, reduced infra burden |
| Data Integration and ETL Best Practices | Medium–High: pipeline design, testing, schema management | Moderate: orchestration tools, storage, data engineers | Clean analytics-ready data, historical records, compliance support | BI, data warehouses/lakes, reporting and analytics | ⭐⭐⭐ Reliable data pipelines, improved data quality |
| Container Orchestration & Kubernetes | High: cluster ops, networking, storage complexity | High: infra, monitoring, operator expertise | Resilient, scalable deployments, standardized operations | Containerized microservices, integration services at scale | ⭐⭐⭐⭐ Automation, portability, high availability |
| Monitoring, Logging, and Observability | Medium: instrumentation, correlation across systems | Moderate: telemetry storage, APM/ELK tools, SRE support | Faster incident diagnosis, proactive alerts, capacity planning | Production systems, distributed microservices, SLAs | ⭐⭐⭐⭐ Reduced MTTR, operational insights, SLA tracking |
| Security, Governance, and Data Privacy in Integration | High: auth, compliance frameworks, policy enforcement | High: security tooling, audits, legal/compliance resources | Reduced breach risk, regulatory compliance, auditability | Regulated industries (healthcare, finance), customer-data systems | ⭐⭐⭐⭐ Data protection, compliance, fine-grained access control |

Turning Integration Strategy into Your Competitive Edge

Mastering enterprise application integration is not a destination; it's a continuous, strategic journey. The best practices detailed throughout this guide represent more than just technical checklists. They are the foundational pillars of a modern, agile, and resilient enterprise architecture. Moving beyond the chaotic, point-to-point integrations of the past, these principles empower you to build a truly interconnected digital ecosystem.

By thoughtfully implementing these enterprise application integration best practices, you transform your IT landscape from a collection of siloed systems into a cohesive, strategic asset. This unlocks the agility needed to pivot with market demands, the scalability to support growth, and the data-driven insights required for genuine innovation. The goal is no longer just to make applications talk to each other; it's to create a synergistic environment where the whole is far greater than the sum of its parts.

Recapping the Core Pillars of EAI Excellence

The journey to integration maturity rests on several critical concepts we've explored. Let's revisit the most impactful takeaways:

- Architectural Intentionality: Your choice between API-first design, microservices, a modernized ESB, or an event-driven architecture is not arbitrary. It must be a deliberate decision aligned with your organization's specific needs for scalability, real-time processing, and decoupling.

- Data as the Linchpin: Effective integration is impossible without a coherent data strategy. Implementing Master Data Management (MDM) and robust ETL/ELT processes ensures that the data flowing between systems is consistent, accurate, and trustworthy, preventing the "garbage in, garbage out" dilemma.

- The Power of Modern Platforms: Tools like Integration Platform as a Service (iPaaS) and container orchestration with Kubernetes have become standard for complex environments. These platforms provide the abstraction, automation, and scalability necessary to manage intricate integration workflows without overwhelming your teams.

- Non-Negotiable Guardrails: In today's landscape, security, governance, and observability are not afterthoughts. They must be woven into the fabric of your integration strategy from day one to protect sensitive data, ensure compliance, and provide the visibility needed to troubleshoot and optimize performance.

Key Insight: Successful EAI is less about choosing a single "best" tool and more about cultivating an integration-centric mindset. It involves building a culture of collaboration, strategic planning, and continuous improvement across development, operations, and business teams.

Your Actionable Path Forward

Translating these concepts from theory into practice requires a clear, iterative plan. Don't attempt a "big bang" overhaul. Instead, focus on incremental progress that delivers tangible value.

- Assess and Audit: Begin by mapping your current integration landscape. Identify the most critical, brittle, or inefficient connections. Use this audit to pinpoint the areas where implementing one of these best practices will yield the highest immediate return on investment.

- Launch a Pilot Project: Select a well-defined, low-risk project to pilot a new approach. This could involve refactoring a single monolithic application into a few microservices, implementing an iPaaS for a new SaaS application, or establishing a robust monitoring dashboard for a critical data pipeline.

- Establish a Center of Excellence (CoE): Create a dedicated team or a cross-functional working group responsible for defining and evangelizing enterprise application integration best practices. This group will create reusable assets, provide guidance, and ensure consistency across all new integration projects.

- Seek Strategic Partnerships: The complexity of modern integration can be daunting. Partnering with specialists, such as a nearshore development agency, can provide the specific expertise and additional bandwidth needed to accelerate your initiatives. This approach allows you to tap into seasoned architects and engineers who have already navigated these challenges, avoiding common pitfalls and fast-tracking your path to success.

Ultimately, the goal is to transform integration from a recurring technical hurdle into a powerful engine for business growth. By embracing these best practices, you are not just connecting systems; you are building a future-proof foundation that enables your organization to innovate faster, make smarter decisions, and deliver superior customer experiences. Your integration strategy is your competitive edge.